GenAI in Law: A Guide to Building Trust

Establishing trust can unlock remarkable benefits for firms that embrace legal AI

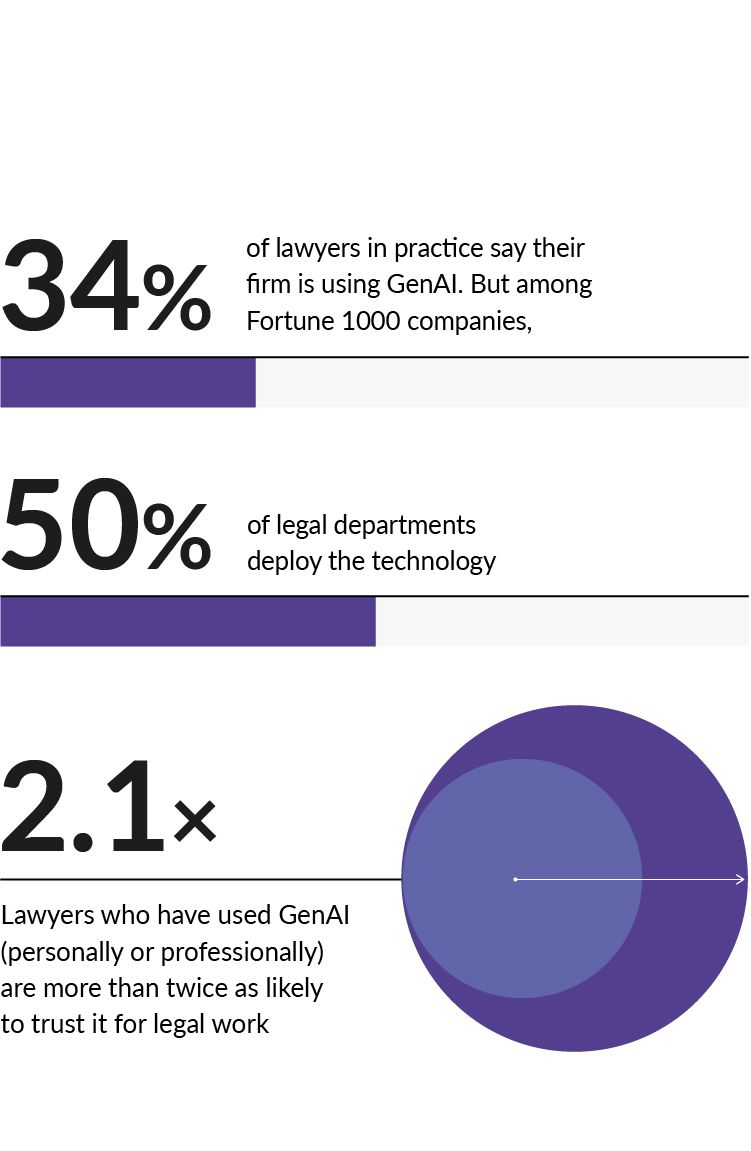

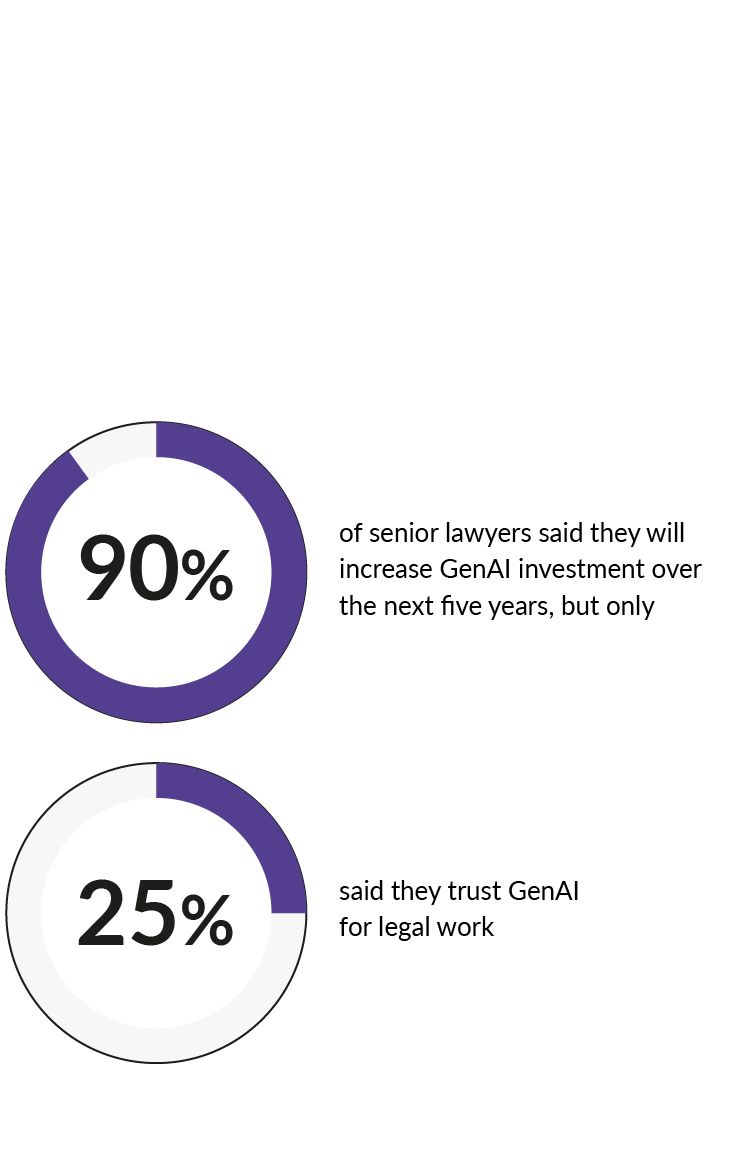

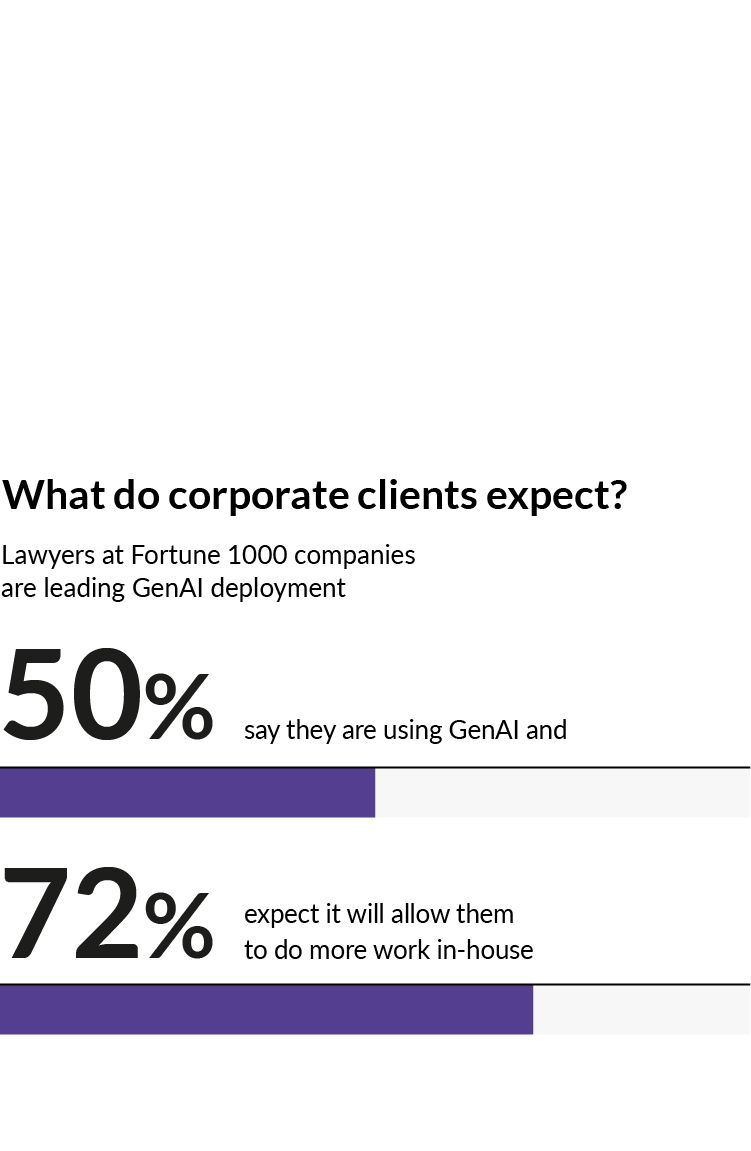

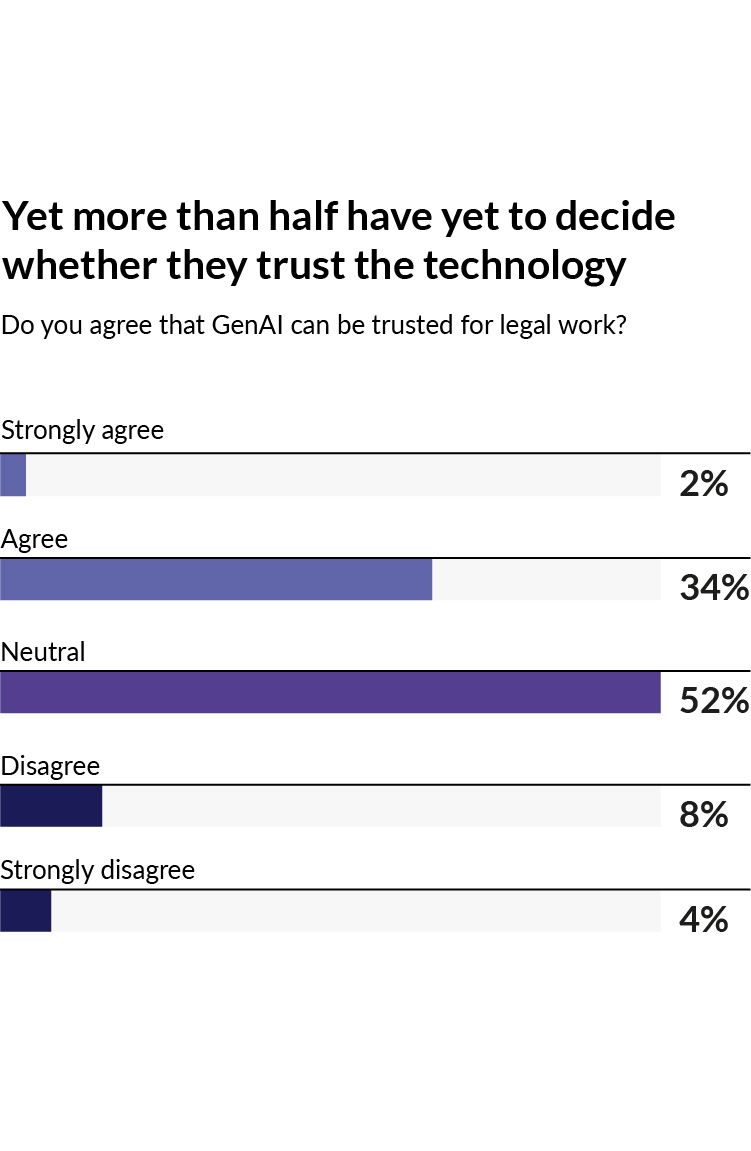

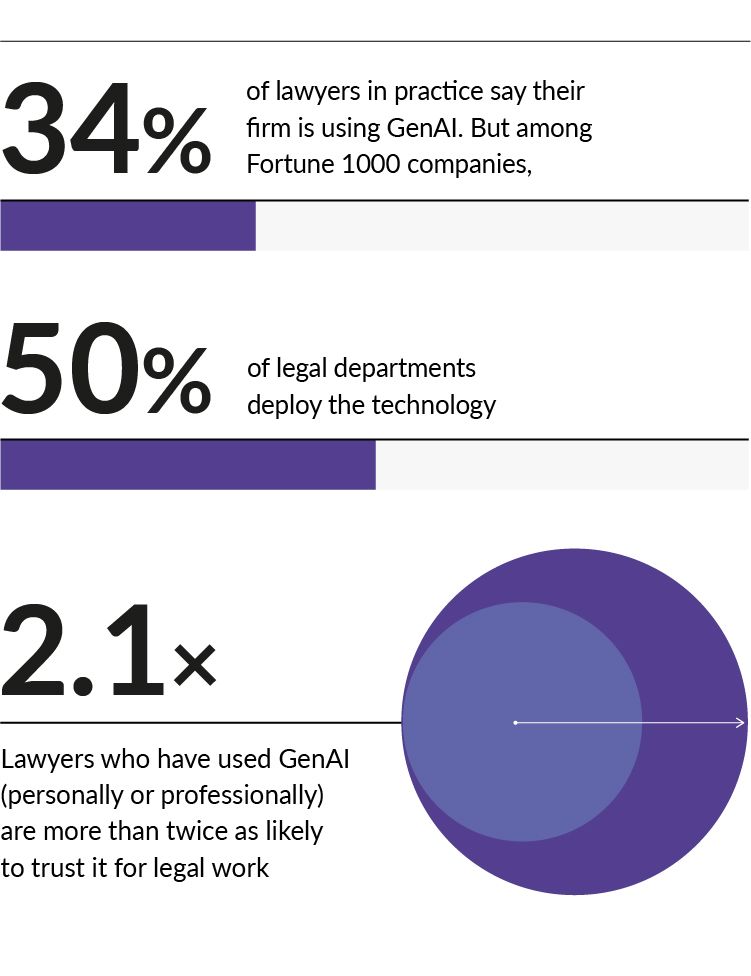

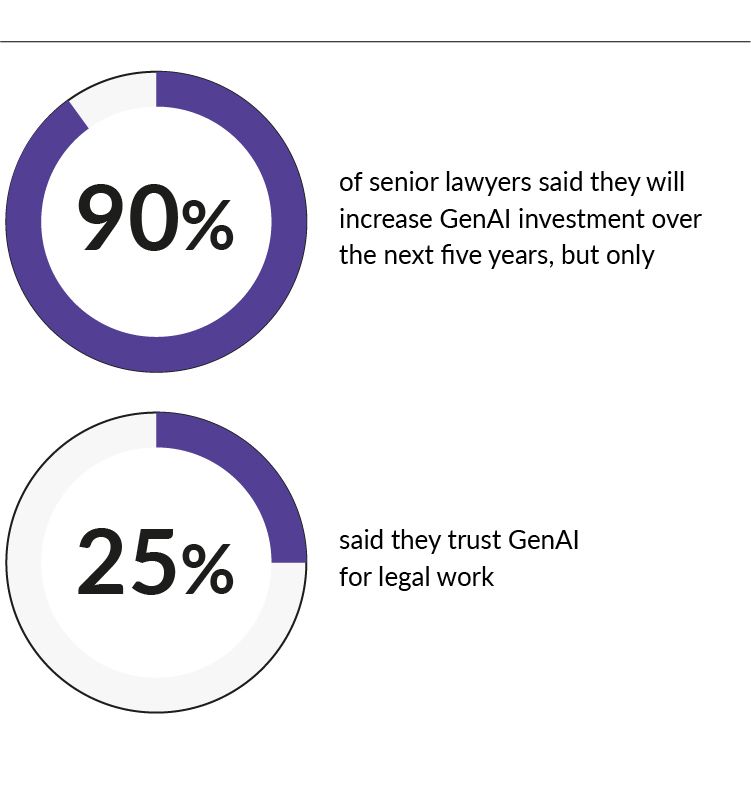

A legal services revolution is on the horizon, with Generative AI (GenAI) poised to transform how lawyers work by automating routine tasks and enabling them to focus on more complex matters. But there’s a snag: lawyers don’t quite trust the technology yet. According to the LexisNexis Investing in Legal Innovation Survey, 37% of senior lawyers say their firms already use AI, but only 25% actually trust it to handle legal work.

To close this trust gap, firms must build their lawyers’ and their clients’ confidence in GenAI tools through a combination of education, hands-on training and practical experience. By taking an informed, measured approach, law firms and in-house legal departments can use GenAI to unlock substantial benefits, transforming legal work by creating efficiencies and opening new revenue streams.

This report outlines how firms can build trust in GenAI and overcome concerns so that the technology can be integrated into workflows, enabling attorneys to work faster and smarter and allowing firms to innovate and ultimately compete more effectively.

What drives trust?

Allowing lawyers to test new GenAI tools can help boost faith in the technology

The emergence of GenAI technology is a step change for the business world that will be as transformative as the advent of the internet. With innovation advancing at a rapid clip, law firms must ensure they are not left behind. Some legal leaders, like Greg Lambert, Chief Knowledge Services Officer at Jackson Walker, have likened this to a boat leaving the dock: the longer firms wait to get on board, the further out to sea it will be and the harder it will be to catch before it disappears over the horizon.

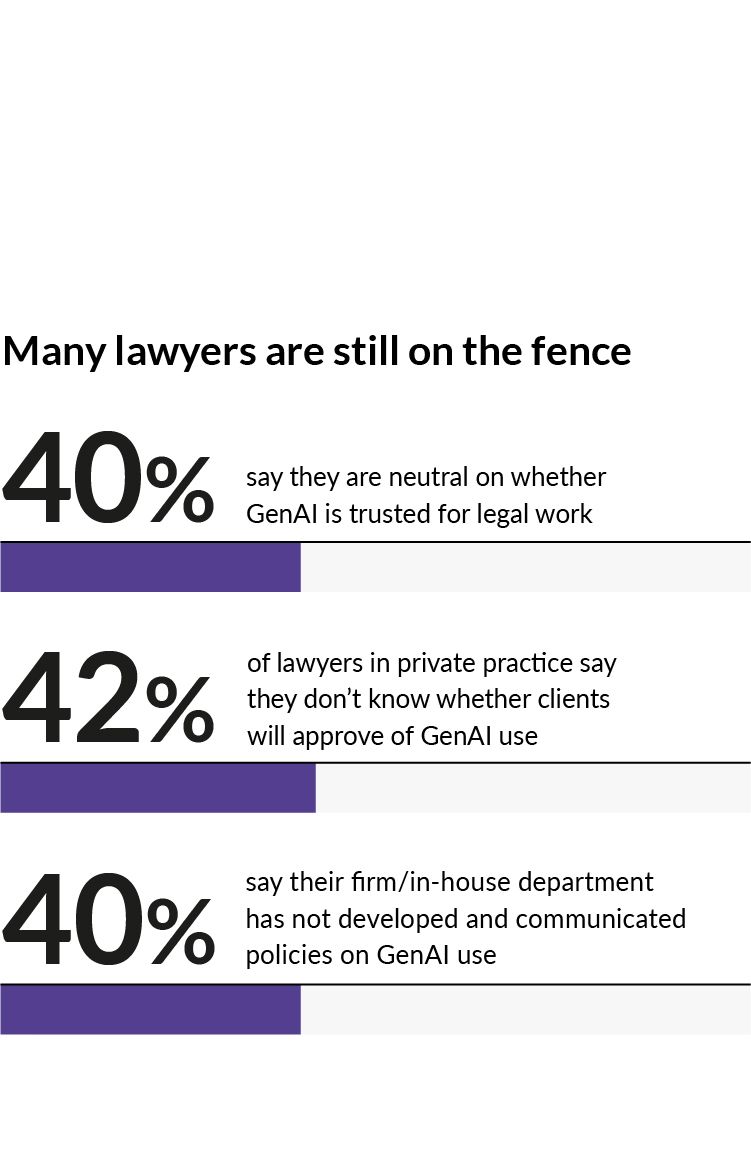

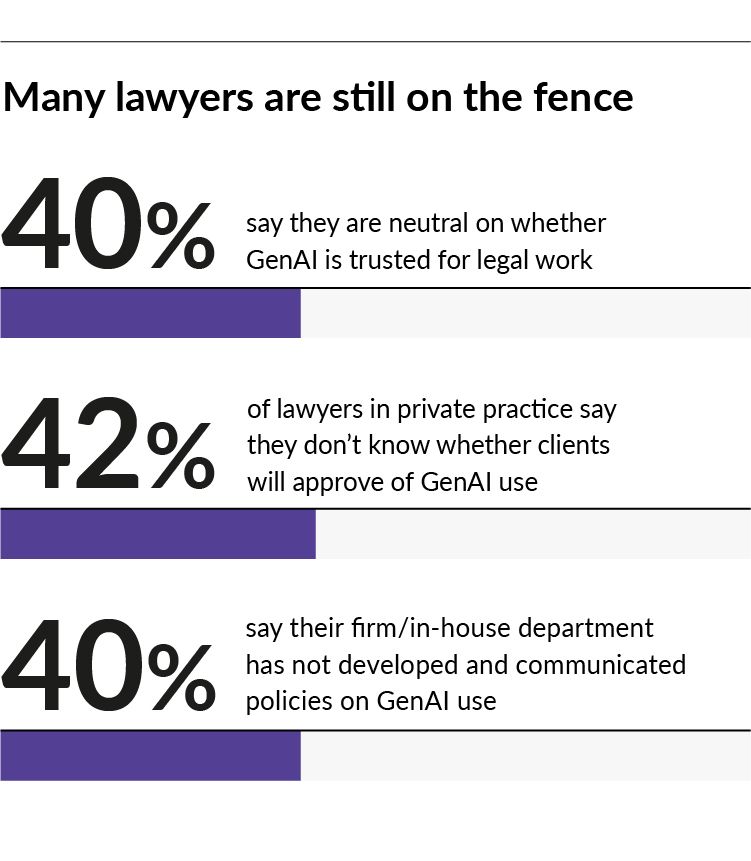

Despite this sense of urgency, some legal professionals remain hesitant to set sail. Views on AI are still very much in flux: the survey data shows only one in 10 lawyers has strong opinions on AI adoption either way. As the AI boat prepares to depart, many firms are keeping one foot onshore while they explore what GenAI can offer.

“We are knee-deep in testing GenAI tools,” says Peter Geovanes, Chief Innovation and AI Officer at McGuireWoods. “My favorite catchphrase that we tell our clients is we’re going to do this with ‘R.E.M.,’ which stands for ‘responsible, ethical and measured’ approach, meaning we have success criteria, we have use cases, we’re engaging with the attorneys, and we’re collecting metrics and feedback. We’re in a trust but verify mode—that’s how we build trust with the tool.”

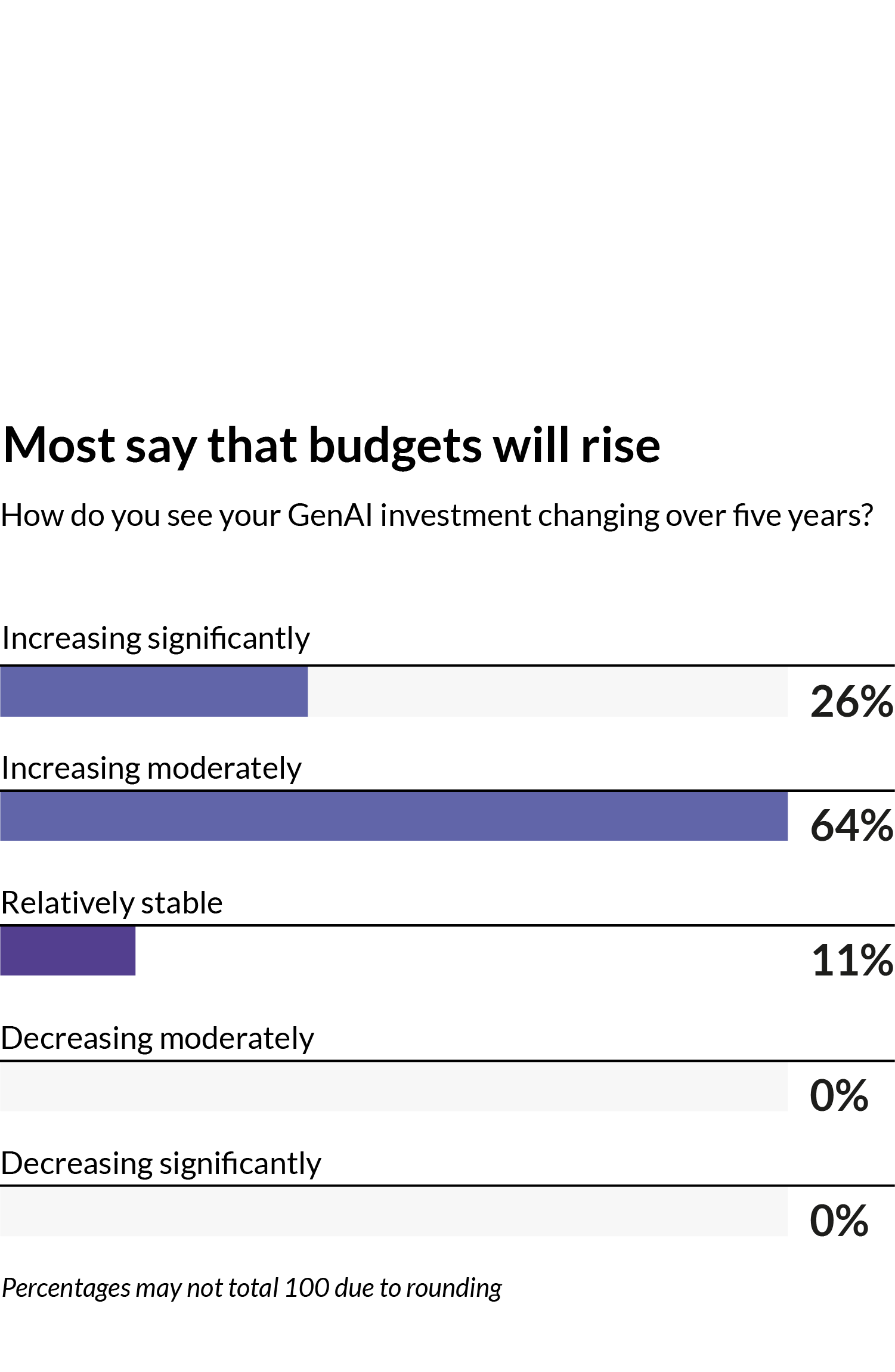

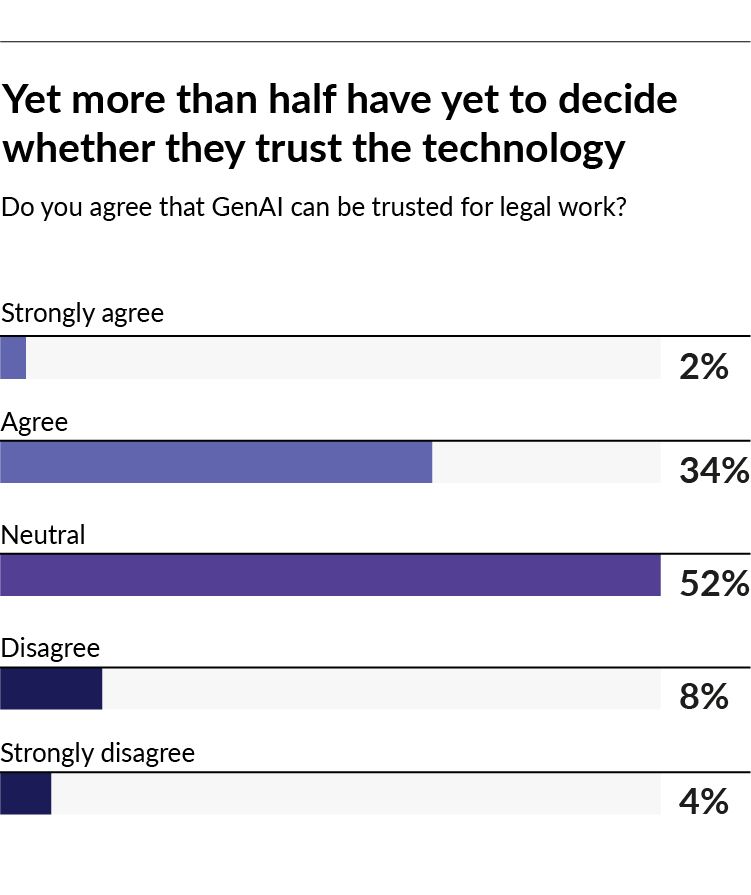

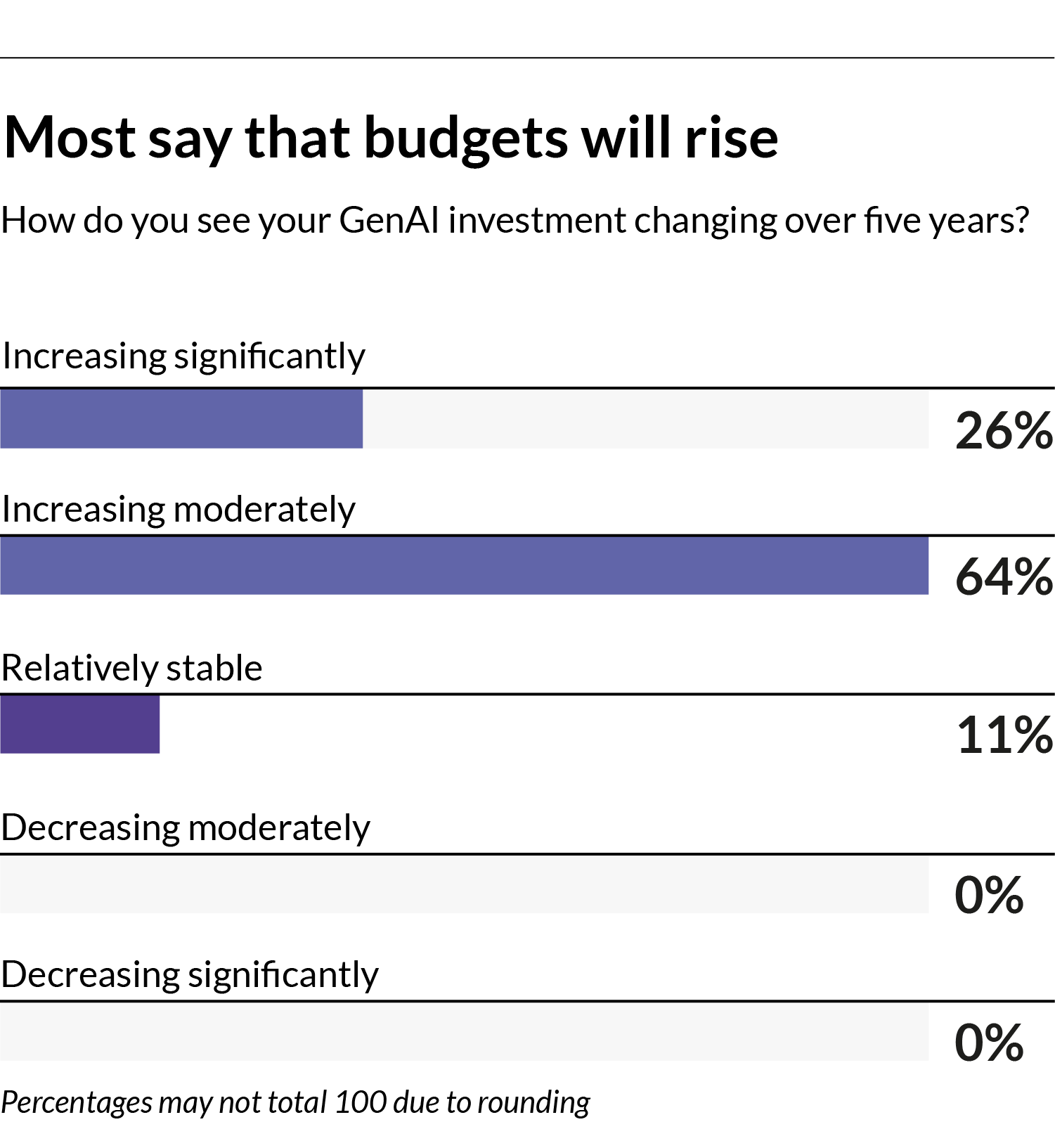

Building trust must be a central theme for firms that want to adopt GenAI tools. While 90% of respondents say their firms will increase GenAI investment over the next five years, only a quarter say they trust the technology to perform legal work.

While some of that lack of trust may stem from a small number of negative press headlines, many lawyers approach AI-generated content much as they review work by newly qualified associates: firms have a responsibility to check its accuracy in exactly the same way or risk malpractice liability.

“There have been plenty of reports in the news and elsewhere as to the various ways that lawyers and law firms have gotten themselves into trouble by using generative AI without completely understanding the risks associated with it,” says Neil Posner, a Principal at Chicago firm Much Shelist.

This has also created significant skepticism among judges, some of whom are requiring lawyers to certify either that they are not using GenAI or that they have read everything they have cited, Posner says.

A lot of that may be “reflective of the fact that judges themselves don’t completely understand, at least not yet, what generative AI can do and what the risks are”, he says.

Trust, therefore, is often informed by the level of exposure that legal practitioners have previously had to the technology. For example, the survey showed that senior lawyers were more than twice as likely to trust GenAI for legal work if they had already used it in a non-work capacity.

“The change management process is aided most by getting hands-on experience with using the tools,” says Jeff Pfeifer, Chief Product Officer at LexisNexis. “The more a lawyer has access to the tools, the more confident and comfortable that they get with interacting with the underlying capabilities.”

Beyond the broader question of trust, 74% of lawyers are also concerned about so-called “hallucinations”, when AI tools invent answers to questions and present them as fact. In June 2023, for example, a US federal court fined two lawyers for citing fake legal research that had been generated by OpenAI’s ChatGPT.

“There is a genuine trust risk in using tools that are not tuned and tailored for legal market use cases,” says Pfeifer. “We are seeing a growing number of tools that are bespoke for the legal market and these tools are likely to yield a high return for lawyers because they are designed to mitigate the risks that we’ve seen in general AI services.”

While exposure to AI tools that are made specifically for the legal industry may help build confidence, there is no shortcut to getting lawyers to a point where they trust AI-generated content.

“I look at it mostly through the lens of working with a smart intern, and the way you build trust with an intern is over time—there’s simply no other way to do it,” says Tod Cohen, a Partner at Steptoe. “You can rely on all the assurances and assumptions you want, but just as when you are working with an intern, until you trust them you are always going to need to check and re-check their work, knowing that they are limited.”

For in-house teams, building trust in AI means getting not only lawyers but also the wider business comfortable with the technology. Take insurance company Liberty Mutual, for example.

“Risk aversion was a real hurdle that we had to get over in order to get to the point where we are today where we have fully integrated AI into our audit process,” says Christy Jo Gedney, a Senior Manager at Liberty Mutual. “So for us to build trust, we started by having our teams meet together and begin that education process. Because of our risk aversion, we took a very conservative approach to beginning the process of using AI.”

The trust issue in detail

Address concerns about accuracy and confidentiality—here’s how

Building trust in GenAI will mean resolving concerns in several areas—from issues involving accuracy and security, to ensuring clients are comfortable with how firms are using AI to deliver legal services.

As outlined in the previous section, some lawyers are concerned about the potential for AI to provide hallucinated answers. Bolstering confidence in the accuracy of AI-generated content is therefore crucial for lawyers to trust what they are reading. This is where specialized legal GenAI tools can help.

“Companies like LexisNexis ensure that answers are generated with the appropriate source citations and references,” says Pfeifer. “Doing so allows an individual to trust the answer quality and that the answers are backed by appropriate legal authority.”

For example, LexisNexis has built a GenAI-based research solution, Lexis+ AI, which grounds its AI service in an underlying database of legal content. The system interprets and optimizes prompts, retrieves and ranks relevant source content, and generates answers based on that authoritative material. References are included in the text so that users can check the sources themselves.
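The general retrieve, rank and cite pattern can be illustrated with a deliberately simplified sketch. It is not how Lexis+ AI works internally, which this report does not describe. The names `Passage`, `rank_passages` and `answer_with_citations` are hypothetical, word overlap stands in for real relevance ranking, and the generation step is replaced by quoting the retrieved passages, so that every statement in the answer carries a numbered reference back to its source.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    """Hypothetical unit of source content with its citation."""
    citation: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real prompt understanding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_passages(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Score passages by word overlap with the query; keep the top k with a non-zero score."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p.text)), p) for p in corpus]
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in scored[:k]]


def answer_with_citations(query: str, corpus: list[Passage]) -> str:
    """Build an answer only from retrieved passages, tagging each one with its source number."""
    hits = rank_passages(query, corpus)
    if not hits:
        # Refuse rather than invent an answer when nothing supports it.
        return "No supporting authority found."
    body = " ".join(f"{p.text} [{i}]" for i, p in enumerate(hits, start=1))
    refs = "\n".join(f"[{i}] {p.citation}" for i, p in enumerate(hits, start=1))
    return f"{body}\n\nReferences:\n{refs}"


if __name__ == "__main__":
    # Placeholder passages for illustration; not real legal authority.
    corpus = [
        Passage("Source A (placeholder)", "A lawyer must verify every authority cited in a filing."),
        Passage("Source B (placeholder)", "Filing deadlines are computed in calendar days."),
    ]
    print(answer_with_citations("Must a lawyer verify cited authority?", corpus))
```

The design choice worth noting is the refusal branch: when nothing in the source collection supports an answer, the sketch says so instead of producing unsupported text, which is the behavior that grounding is meant to encourage.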

Another key consideration for firms is data security, to uphold client confidentiality requirements. In practice, this means checking that contracts with third-party GenAI providers do not allow firm data to be shared with the provider, a provision that open GenAI companies often include in their terms and conditions on the grounds that sharing data will improve their service.

“GenAI are data-intensive tools. They’re like the ravenous plant from The Little Shop of Horrors—they always need to be fed,” says Cohen. “For a law firm, that’s really a question of what are we feeding the tools with and how do we make sure that the tool isn’t being fed with confidential and proprietary data and then is reused by other clients and potentially by other downstream users inside and outside of the firm? That’s really the most difficult part.”

Ensuring data-sharing clauses are removed from contracts can provide reassurance of confidentiality, while improvements in commercial-grade cloud infrastructure have also made using GenAI much more secure than earlier generations of the technology.

“We spend significant time working with our clients to help them understand the technology infrastructure and the extensive steps that we’ve taken to ensure that their experiences are highly secure and highly confidential,” says Pfeifer.

To that end, LexisNexis has made customer data security and privacy a priority, opting out of certain Microsoft AI monitoring features to ensure OpenAI cannot access or retain confidential customer data.

Firms can also increase confidence in GenAI tools by putting in place policies and guidelines that govern how the tech can be used.

Posner at Much Shelist says that because he is very focused on the firm’s risk management, the potential for attorneys to improperly use open GenAI tools is something that keeps him awake at night. However, those concerns can be eased by erecting appropriate guardrails.

“We don’t want to dissuade them from using it if they can use it thoughtfully and safely and are aware of what the risks are,” he says.

Aside from not entering client information that could compromise confidentiality, those policies should also ensure attorneys are fact-checking any content provided.

“Whatever you get back, you still have to use your own judgment,” says Posner. “AI is just a tool.”

Therefore, he says, using AI is no different from using other legal research tools: an attorney citing a case must read and understand it to avoid the risk of committing malpractice.
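A policy requiring attorneys to check every cited authority can be backed by simple tooling. The sketch below is a hypothetical helper, not a product feature described in this report. It extracts citations that match an assumed, simplified pattern for a few US federal reporters and flags any that are missing from the list of authorities the attorney has actually read. It only catches citations nobody has checked; it cannot tell whether a case says what the draft claims, which still requires reading it.

```python
import re

# Assumed, simplified pattern for a few US federal reporters
# (U.S., F., F.2d, F.3d, F.4th, F. Supp., F. Supp. 2d/3d).
# Real citation formats are far more varied; illustrative only.
CITATION = re.compile(
    r"\b\d{1,4} (?:U\.S\.|F\.(?:2d|3d|4th)?|F\. Supp\.(?: [23]d)?) \d{1,5}\b"
)


def unverified_citations(draft: str, verified: set[str]) -> list[str]:
    """Return citations in the draft that are not in the verified set.

    Each unverified citation is reported once, in order of first appearance.
    """
    found = CITATION.findall(draft)
    # dict.fromkeys removes duplicates while preserving order.
    return list(dict.fromkeys(c for c in found if c not in verified))


if __name__ == "__main__":
    # Hypothetical draft text and reading list, for illustration only.
    draft = "See 123 F.3d 456; but see 999 F. Supp. 2d 100 and 999 F. Supp. 2d 100."
    read_by_attorney = {"123 F.3d 456"}
    for cite in unverified_citations(draft, read_by_attorney):
        print(f"Check before filing: {cite}")
```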

Reed Smith’s Chief Financial Officer, James Metzger, says all of the firm’s AI initiatives run through its AI governance process, which covers not only security protocols but also jurisdictional compliance, information governance, and client requirements. The aim is to integrate these systems into the firm’s development and quality assurance expectations through organized pilots, rather than leaving attorneys to experiment without guardrails.

Firm policies may also be shaped by clients who have their own AI guidelines about what is permissible and what they expect their legal services providers to disclose on AI use, says Pfeifer.

“Leading firms around the world are exploring synthesizing those two things together, so they’re able to incorporate guidance from clients with their own internal processes to create detailed, documented playbooks. These AI playbooks can guide how generative AI should be tested, leveraged, experimented with, and then eventually applied to actual workflow processes,” Pfeifer adds.

Some lawyers believe that placing overly restrictive limits on GenAI use may backfire: lawyers will likely use the technology anyway, but with the added risk that they adopt tools that are not geared for legal work.

“I’m not an advocate for special rules for AI,” says Cohen. “You just need the simple common-sense guidelines that you already have in place for the use of any type of technology in the workplace.”

Any guardrails that are put in place should be crafted for each specific use case rather than being applied generally.

“If it’s a litigation use case, you’re likely to be very concerned about privilege, especially in the US where you have a lot of privilege issues around the use of third-party tools and whether or not they in any way compromise privilege,” Cohen adds.

Reassuring clients about AI use is also no different from earlier rounds of technology adoption in legal settings.

“I see this as a logical progression where both sides—in-house corporate legal departments and the law firms—get comfortable with a new technology. They are exploring concerns and having conversations about what use of that technology looks like in daily practice,” says Pfeifer.

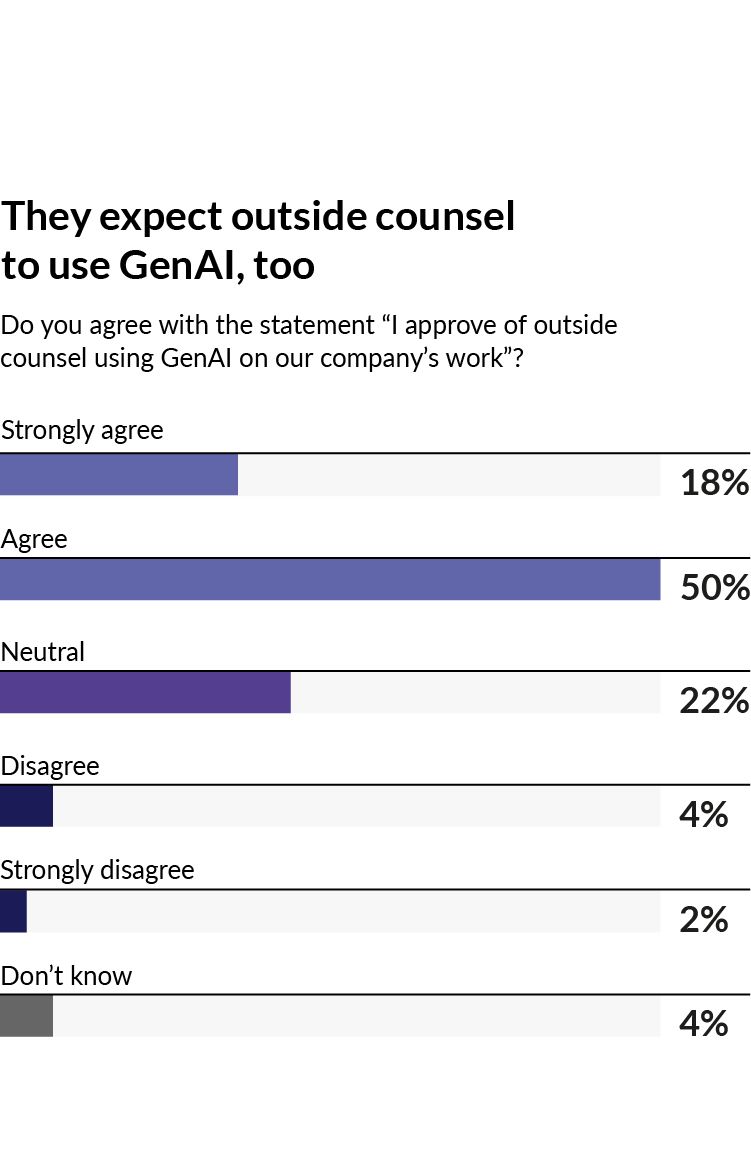

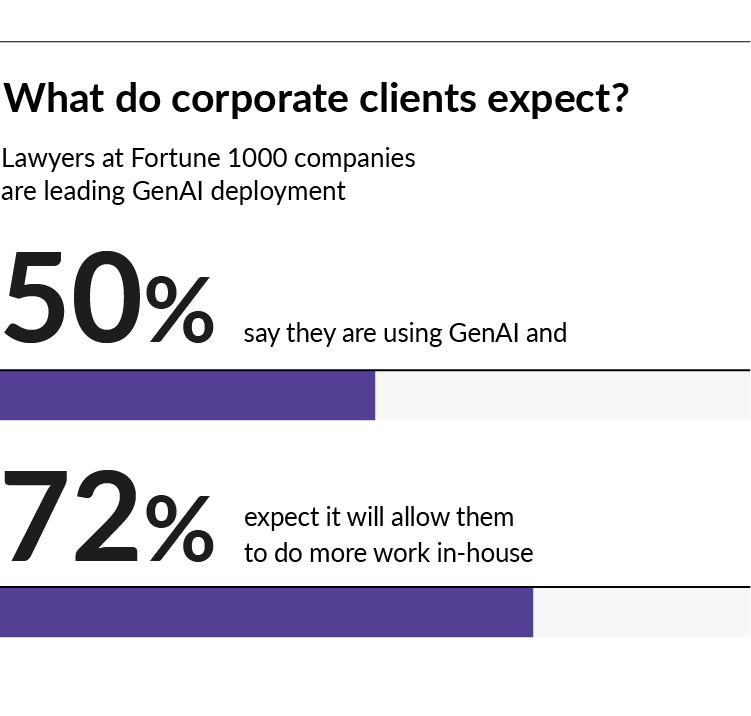

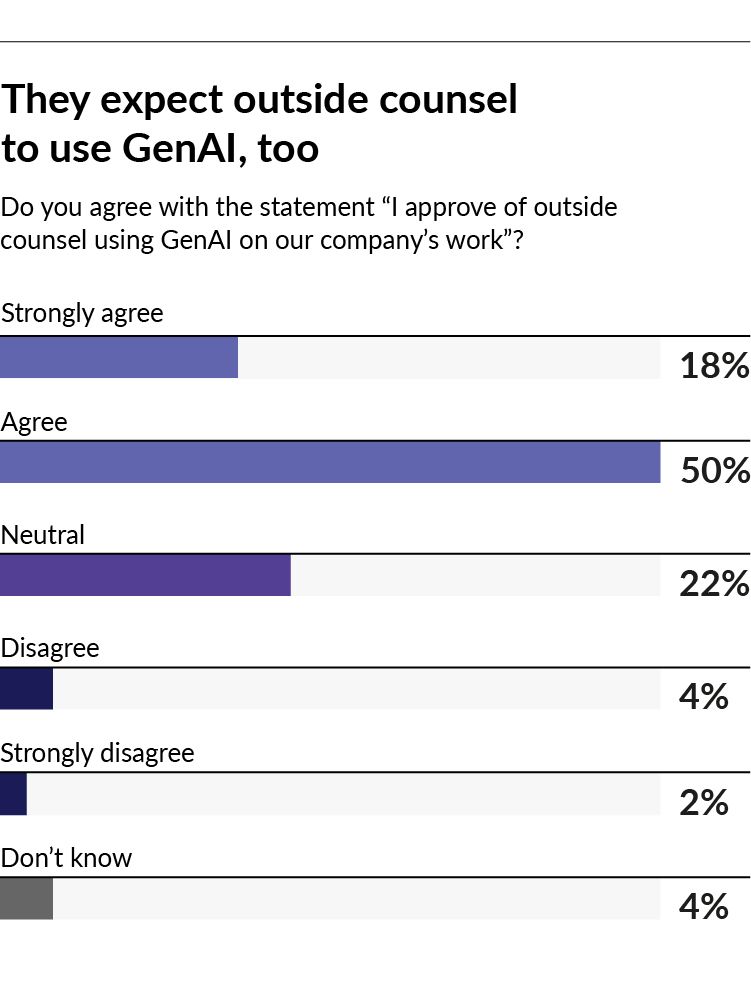

Clients also increasingly expect firms to use AI to deliver efficiency benefits, with many in-house teams seeking information on AI use when they issue a request for proposal.

Geovanes explains, “I take that information to our partnership not as my personal endorsement of these technologies and tools, but as a direct request from our clients. They’re the ones insisting that we explore ways to enhance productivity and creativity in order to reduce expenses and improve the legal services we offer to them.”

Some in-house teams not only expect their law firms to use AI tools but are also helping guide them on that journey.

“We are now starting to host AI vendor showcases with our panel law firms and give them exposure to the same vendors that we've been bringing in house as well,” says Gedney.

Some firms such as Steptoe & Johnson PLLC have invested in legal AI tools but are holding back from using generative AI on client work until they have confidence in using the technology internally.

“The firm prohibits our attorneys from using it directly with clients while we are in a testing environment,” says Krista Ford, Director of Knowledge, Research and Information Services at Steptoe & Johnson. “So even though it's been rolled out firm-wide, it's still not rolled out with clients.”

Even when it is ready for client consumption, Ford believes that the tech will likely be rolled out gradually for certain use cases. For now, there is still some uncertainty among clients about what GenAI can offer and the extent of its capabilities.

“There is a buzz in the market—everyone is talking about AI,” says Ford. “Some of our clients are terrified of it and don’t want anything to do with it, and other ones want us to be able to do things that aren’t even developed in the market yet.”

The steps to building trust

Pilot projects can be a great way to introduce GenAI tools and build grassroots support

Forward-thinking law firms are not waiting to see how GenAI could be adopted in their practices—they are already experimenting with its ability to change the way they work and deliver legal services to clients.

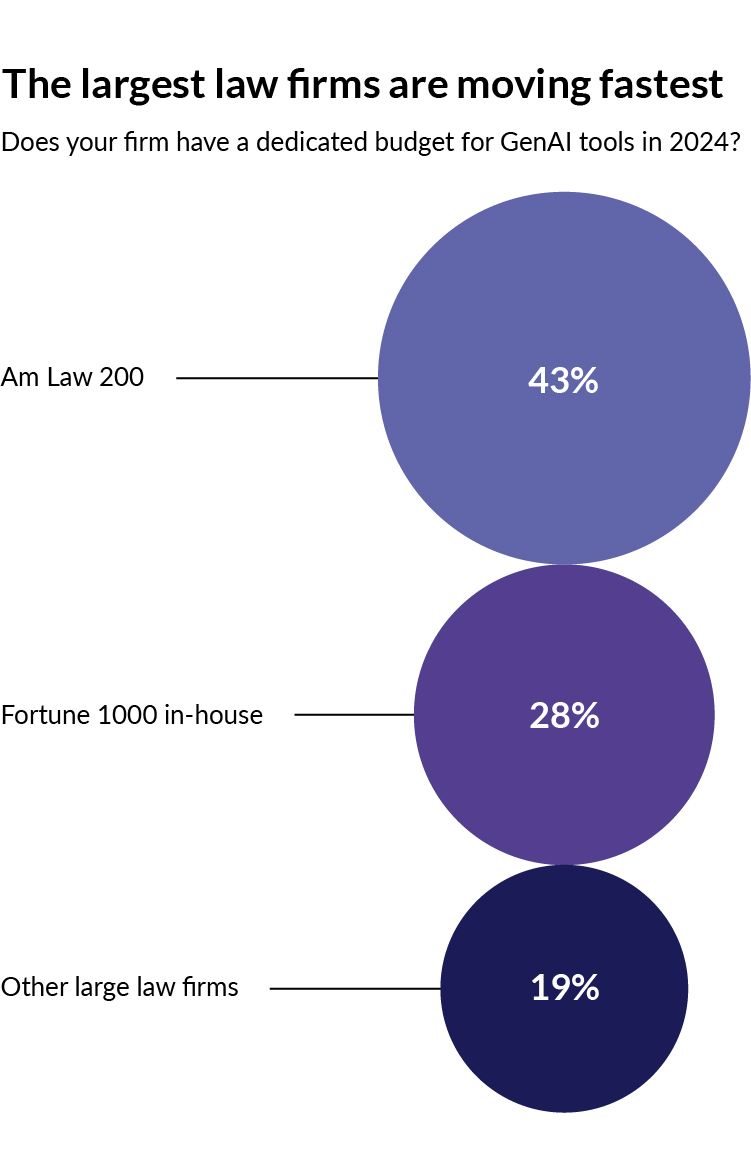

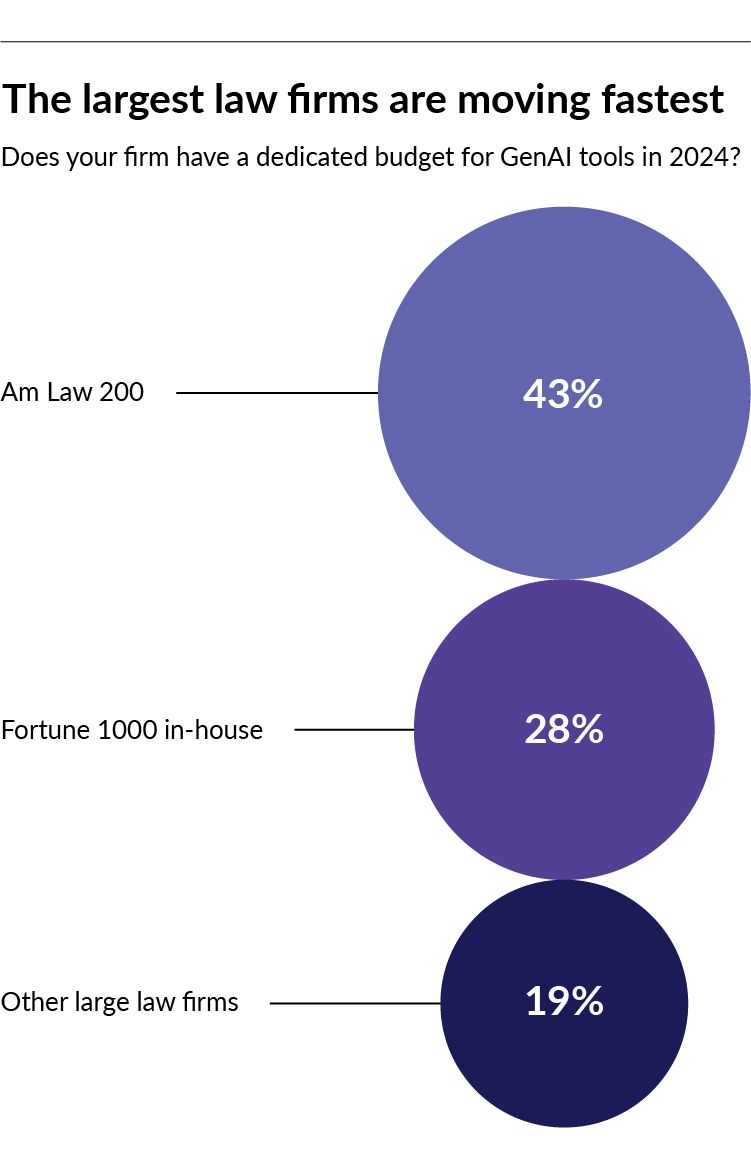

The survey shows that the largest law firms are moving the fastest, with 43% of firms in the Am Law 200 setting aside a dedicated budget for GenAI tools. By contrast, only 19% of other firms have a GenAI budget.

But that doesn’t mean those larger firms are rushing blindly into deploying new GenAI tools. Top firms are studying appropriate use cases first and spending time figuring out how the tech could be applied to their practice areas and workflows.

“We have seen firms do wide-scale documentation of various use case opportunities, and then isolating opportunities where the value is perceived to be the highest,” says Pfeifer. “We see organizations that go through a rigorous review first generally do better later when they actually implement the technology.”

Successful firms are also focusing on small, quick experiments, much as software teams iterate during development, Pfeifer adds.

“It is very helpful to start with small increments rather than wide-scale application of the technology so that one can learn from what goes well and learn where adjustments need to be made,” he says.

Firms also need to include their attorneys on AI programs under consideration, to ensure the tech is relevant to them and can add value to their day-to-day working practices.

“Our technology team has really been engaged with the attorneys on the introduction of generative AI tools and determining which ones make sense for the firm to invest in and which ones don’t make sense,” says Cohen.

Some firms like Reed Smith are running multiple pilot projects to test different AI tools to see which ones end up working best in practice for clients and internally. “We’re taking a ‘thoughtfully progressive’ approach to validate the maturity and usefulness of each system versus locking on to only one vendor’s AI technology,” says Metzger. “We don’t want to place all of our bets in one basket at this point.”

One approach some firms are taking to build trust is to identify small pockets of individuals within the firm who are likely to be enthusiastic adopters and who can test the technology—an approach that Steptoe & Johnson is taking.

“We also message this in practice group meetings with our department heads and our executive committee, and then we have those trusted leaders talk to individuals in their groups to build that trust, because a lot of it has to come from top,” says Ford. “We have a multifaceted approach to building trust at the firm.”

As with all legal technology programs, not all lawyers will be instant converts, and some may even be openly resistant. Yet Cohen believes it is not necessary to win unanimous support to introduce and use GenAI tools. Just as only a few attorneys still rely on dictation, those resistant to GenAI will naturally dwindle in number over time. However, GenAI tools do need to be integrated seamlessly into daily workflows and be simple to operate, or they will likely remain unused or underused, Cohen says.

“There might be an enormous amount of features that would be super useful for most users, but they may never have any idea that they exist,” he says. “That’s part of the problem with the introduction of generative AI tools—they have all kinds of capabilities, but it’s hard to get those in front of people in a way that makes it easy for them to use.”

To avoid this problem, firms need to have a coherent view of how AI can be used across the firm so that all stakeholders are aware of what is going on and the benefits it can bring.

“We’ve been looking at GenAI and examining it and identifying risks and opportunities and how to protect ourselves and our clients, but we're doing it holistically and looking at it from a firm-wide view, rather than allowing different camps in the law firm to approach these issues individually,” says the Head of Knowledge Management at a top 150 US law firm.

Forward-thinking law firms are not waiting to see how GenAI could be adopted in their practices—they are already experimenting with its ability to change the way they work and deliver legal services to clients.

The survey shows that the largest law firms are moving the fastest, with 43% of firms in the Am Law 200 setting aside a dedicated budget for GenAI tools. By contrast, only 19% of other firms have a GenAI budget.

But that doesn’t mean those larger firms are rushing blindly into deploying new GenAI tools. Top firms are studying appropriate use cases first and spending time figuring out how the tech could be applied to their practice areas and workflows.

“We have seen firms do wide-scale documentation of various use case opportunities, and then isolating opportunities where the value is perceived to be the highest,” says Pfeifer. “We see organizations that go through a rigorous review first generally do better later when they actually implement the technology.”

Successful firms are also focusing on small, quick experimentation in much the same way as software development occurs, Pfeifer adds.

Workflow, skills and culture

The biggest benefits will come to lawyers who rethink how they work

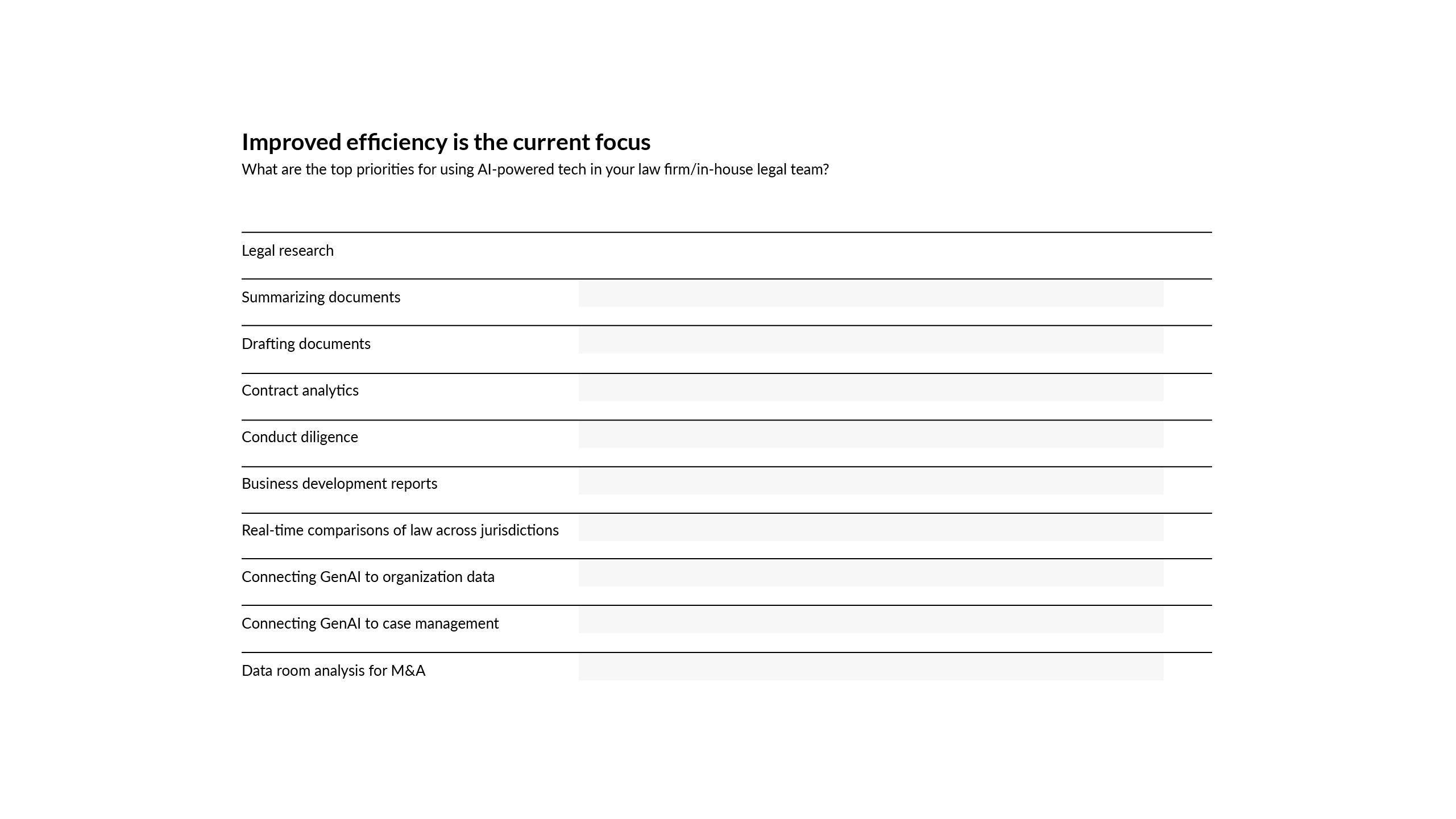

The transformative nature of GenAI technology is not only going to change the way lawyers work; it is also going to require new skills and new ways of thinking. Management consultancy BCG, for example, says that introducing GenAI should follow what it calls a 10/20/70 rule: 10% of effort should be spent on getting the data right, 20% on the AI technology and the remaining 70% on transforming people and business models.
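As a rough illustration of the arithmetic, the sketch below splits a hypothetical effort budget according to the 10/20/70 proportions as this report describes them. The 2,000-hour figure, the function name `allocate` and the category labels are assumptions for illustration only, not BCG's terminology or any firm's actual numbers.

```python
# Effort shares (in percent) as this report summarizes the 10/20/70 rule.
# The category names are illustrative labels, not BCG terminology.
SPLIT_PERCENT = {
    "data": 10,
    "ai_technology": 20,
    "people_and_business_models": 70,
}


def allocate(total_effort: float) -> dict[str, float]:
    """Split a hypothetical effort budget (e.g. hours) by SPLIT_PERCENT."""
    if sum(SPLIT_PERCENT.values()) != 100:
        raise ValueError("shares must add up to 100%")
    return {area: total_effort * pct / 100 for area, pct in SPLIT_PERCENT.items()}


if __name__ == "__main__":
    # Hypothetical example: 2,000 hours of effort planned for a GenAI rollout.
    for area, hours in allocate(2000).items():
        print(f"{area}: {hours:,.0f} hours")
```

For the assumed 2,000 hours, this prints 200 hours for data, 400 for AI technology and 1,400 for people and business models, which makes plain how much of the work the rule places outside the technology itself.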

“A big part of my job is change management and trying to work through adoption and what that means,” says Steptoe & Johnson’s Ford. “GenAI is a huge leap. It's like nothing that we've seen before—it's going to change absolutely everything. We’ve been talking about AI for a while, but most of that’s machine learning. This is a completely different animal.”

Firms therefore need a robust change management plan if they want to adopt GenAI technology successfully and ensure that attorneys use it effectively.

“Firms need to ensure that the right training and support for a cultural shift is in place to help professionals who are making the journey to adopt and use these technologies in their day-to-day practice,” says Pfeifer.

This will often require a degree of hand-holding, given that lawyers are laser-focused on client matters and billable-hour targets rather than on changing the way they work.

“If left to manage on their own, the attorneys won’t succeed, largely due to their hectic schedules which leave little room for adopting new tech,” Geovanes explains.

“That’s why we’ve committed to what we term as foundational training to cover the basics, followed by workshop training a week later that focuses on their specific use cases and aids in their understanding of the tool’s capabilities.”

McGuireWoods has also consulted with its professional liability insurer to put in place mandatory ethics and responsibility training for all its lawyers on GenAI use.

A big part of this foundational training involves learning to write effective prompts for GenAI: if the prompts lawyers enter aren’t well crafted, they may not get the information they are seeking.

“The most exciting thing with GenAI is that interaction becomes conversational. But to become conversational, you have to become more skilled at interacting and prompting the service to give you the information you need,” says Pfeifer. “There is a significant focus on prompt engineering training and prompt interaction training so individuals can get effective answers and interactions with a large language model service.”
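One widely used way to make prompts more effective is to fill in a fixed structure (the role the model should take, the task, the relevant context, any constraints and the desired output format) instead of typing a one-line question. The sketch below is a hypothetical illustration of that idea: it only assembles the text of a prompt, does not call any GenAI product or API, and the `LegalPrompt` class and its fields are assumptions rather than a LexisNexis or firm-specific method.

```python
from dataclasses import dataclass, field


@dataclass
class LegalPrompt:
    """Hypothetical structured prompt; the field names are illustrative."""
    role: str
    task: str
    context: str = ""
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self) -> str:
        # Assemble only the sections that were filled in, in a fixed order,
        # so every prompt a lawyer sends follows the same shape.
        parts = [f"Role: {self.role}", f"Task: {self.task}"]
        if self.context:
            parts.append(f"Context: {self.context}")
        if self.constraints:
            parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        if self.output_format:
            parts.append(f"Output format: {self.output_format}")
        return "\n\n".join(parts)


# A one-line prompt such as "Tell me about this lease." leaves the model to
# guess the perspective, scope and format; the structured version spells them out.
structured = LegalPrompt(
    role="You are assisting a commercial real estate associate.",
    task="Summarize the termination rights in the attached lease.",
    context="The client is the tenant; the lease is governed by New York law.",
    constraints=[
        "Cite the clause number for each right.",
        "Flag any ambiguity rather than guessing.",
    ],
    output_format="A bulleted list, one bullet per termination right.",
).render()

if __name__ == "__main__":
    print(structured)
```

The design choice here is deliberately simple: a template cannot guarantee a good answer, but it nudges users toward supplying the context and constraints that a conversational model otherwise has to infer.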

While all lawyers may need to attain a basic level of AI skills, it is unlikely that all of them will need to become AI experts. Just as firms already have a mix of skill sets within their workforce, AI will simply become another area in which some lawyers are stronger than others.

“Firms recognize that certain people have certain skills that others do not,” says Much Shelist’s Posner. “We know that certain lawyers in the firm are just really great at legal research and some are really great at drafting. And you recognize who has what skills and you bring them into a matter when you need that skill because it's efficient. I don't think that's going to change.”

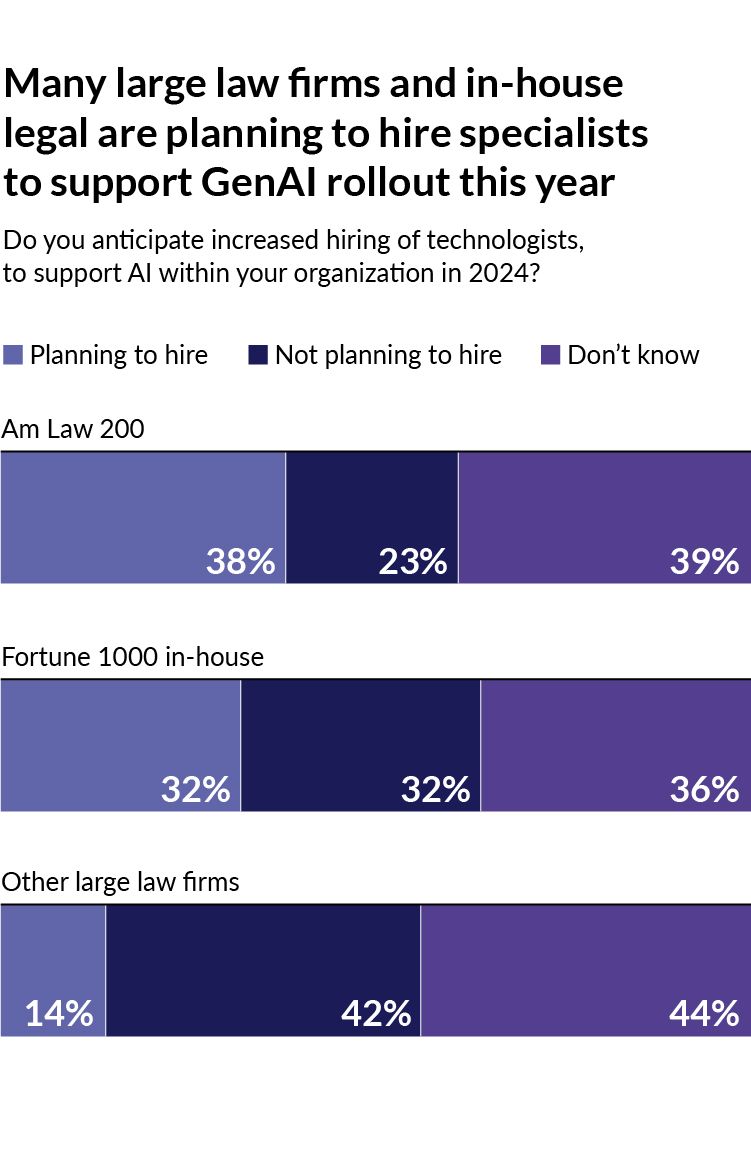

However, GenAI adoption may require entirely new skill sets at firms. More than a third of Am Law 200 firms (38%) are planning to hire technologists and AI specialists this year to help with GenAI initiatives.

“We see law firms exploring the required resources and skills needed to power these capabilities within their own organizations,” says Pfeifer. “That may mean adding people that are proficient at various data labeling activities or data science work that’s necessary to drive the right kind of outcomes from interaction with a generative AI toolset.”

As an alternative, some firms are exploring partnerships with third parties to ensure they have the right expertise on hand without having to hire their own specialists.

As well as training lawyers to use GenAI, firms will also likely train the machines, so that GenAI operates as a personal assistant that lawyers can interact with as if it were a colleague.

“Our vision at LexisNexis is that AI services become a trusted assistant to the individual, and knows how an individual works, how they like to write, and how they like to extract information,” says Pfeifer. “Services as they’re developed over time should feel more human-like in their interaction.”

Conclusion: making trust real

Acceptance of GenAI may take time, but the technology will eventually become the ‘new normal’

For firms to move from the experimentation stage to widespread GenAI adoption, they need to build trust across their organizations. Right now, 40% of lawyers are on the fence when it comes to trusting GenAI for legal work. Yet getting everyone comfortable with boarding the AI boat might not require absolute confidence in the tools; it may simply involve bringing risk down to an “acceptable tolerance level”, as Justin Grad, associate general counsel at AWS, puts it.

The starting point for that is addressing concerns about the safety of client data. This means carrying out appropriate due diligence when selecting third-party tech providers for GenAI tools, and reviewing their security infrastructure to ensure it meets the high standards of confidentiality and legal privilege that firms and their clients expect, Pfeifer says.

“Security and confidentiality are critical and law firms need to be smart about the partnerships they strike, and the technology configuration deployed in their organizations,” says Pfeifer. “There’s no shortcut to that.”

Firms may also just need to be patient in building trust rather than expecting attitudes to change overnight.

“GenAI will provide value and it will be a game-changer in the industry. I just don't think we're going to get there in 18 to 24 months,” says Metzger.

For lawyers to gain trust in the technology, some say the key is giving them as much hands-on experience with GenAI tools as possible so they can see the benefits up close for themselves.

“Ideally those early experiences create a foundation an individual can build skill and capability on top of,” says Pfeifer. “Leading organizations are helping their lawyers through that process by supporting training and other activities that help make that transition as smooth as possible.”

Metzger says it may quickly become clear that partners who use GenAI tools deliver more, whether measured by speed, impact or efficiency, than colleagues who persist with manual processes. At some point in the future, those partners may demand a “bigger share of the profitability pie” because they can provide more value to both the firm and clients.

Ultimately, lawyers may realize they have no choice but to board the AI boat when they look around and see no one else is left on the dock.

“People will just recognize that this is the way of the world,” says the Head of Knowledge Management at a top 150 US firm. “You can't escape it, GenAI is going to become the new normal and people are going to need to get comfortable with it.”

GenAI, then, has the potential to change how law firms operate and how attorneys do their jobs. By taking steps to build trust, firms can help their lawyers see the benefits of GenAI, from working more efficiently to opening new service lines and revenue streams. If done effectively, GenAI can help firms become more competitive, drive profitability and remain relevant in an increasingly digital world.

Survey overview

This report uses data from the LexisNexis Investing in Legal Innovation Survey, which polled 266 leaders from large law firms and Fortune 1000 companies about their plans for generative AI adoption and investment.

To find out more, visit The Future of Legal GenAI page or download the second report in this series, GenAI in Law: Unlocking New Revenues.